on

768 words - 4 minutes to read

How to ingest an S3 file into an RDS PostgreSQL table ?

The problem

I’m using Redshift for a while now, and one feature I find particularly useful, is the ability to load a table from the content of an S3 file:

COPY table_name

FROM 's3://bucket-name/path/file.ext'

iam_role 'arn:aws:iam::<aws-account-id>:role/<role-name>'

region 'region-code';

Lately, I needed to do the very same thing with a PostgreSQL database, hosted on RDS.

The good news is : YES WE CAN !

The solution

Installation

AWS pre-installs (among other things) 2 extensions on our RDS :

- aws_commons

- aws_s3

Now, since we need to interact with S3, we simply need to run the following command, assuming

our user is a superuser or has database owner privileges:

CREATE EXTENSION aws_s3 CASCADE;

This command installs both aws_commons and aws_s3.

Okay, that was the easy part.

Well, now we have to work on the necessary IAM role for our RDS instance…and that’s where we’ll find the first pitfall…

Under the hood, installing the aws_s3 extension also adds an IAM role, managed by AWS (and therefore, read only for us)

to interact with plenty of AWS services.

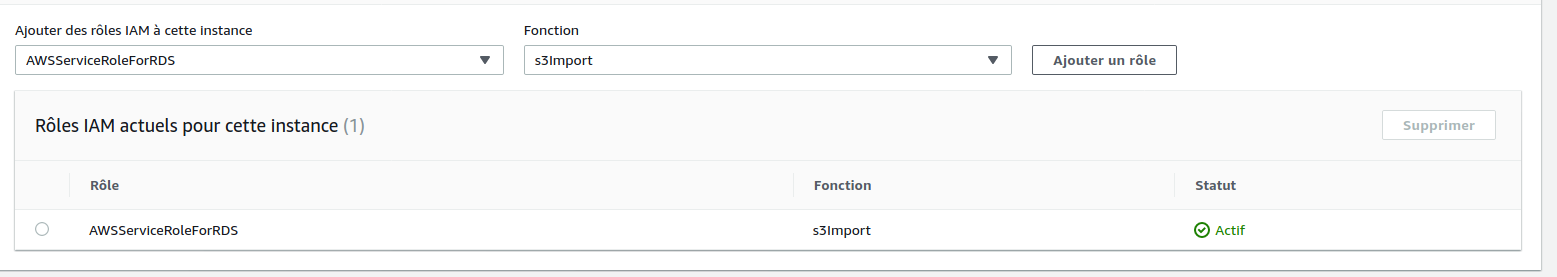

As we can see below, the role is linked to the S3Import function :

The problem is, we can only link a single role to a function and this one does not have any access to our S3 bucket, therefore this cannot work.

In the end, even if the policy is super short in the doc, here is what we actually need :

{

"Version": "2012-10-17",

"Statement": [

{

"Sid": "s3import",

"Action": [

"s3:GetObject",

"s3:ListBucket"

],

"Effect": "Allow",

"Resource": [

"arn:aws:s3:::bucket-name",

"arn:aws:s3:::bucket-name/*"

]

},

{

"Effect": "Allow",

"Action": [

"rds:CrossRegionCommunication"

],

"Resource": "*"

},

{

"Effect": "Allow",

"Action": [

"ec2:AllocateAddress",

"ec2:AssociateAddress",

"ec2:AuthorizeSecurityGroupIngress",

"ec2:CreateNetworkInterface",

"ec2:CreateSecurityGroup",

"ec2:DeleteNetworkInterface",

"ec2:DeleteSecurityGroup",

"ec2:DescribeAddresses",

"ec2:DescribeAvailabilityZones",

"ec2:DescribeCoipPools",

"ec2:DescribeInternetGateways",

"ec2:DescribeLocalGatewayRouteTables",

"ec2:DescribeLocalGatewayRouteTableVpcAssociations",

"ec2:DescribeLocalGateways",

"ec2:DescribeSecurityGroups",

"ec2:DescribeSubnets",

"ec2:DescribeVpcAttribute",

"ec2:DescribeVpcs",

"ec2:DisassociateAddress",

"ec2:ModifyNetworkInterfaceAttribute",

"ec2:ModifyVpcEndpoint",

"ec2:ReleaseAddress",

"ec2:RevokeSecurityGroupIngress",

"ec2:CreateVpcEndpoint",

"ec2:DescribeVpcEndpoints",

"ec2:DeleteVpcEndpoints",

"ec2:AssignPrivateIpAddresses",

"ec2:UnassignPrivateIpAddresses"

],

"Resource": "*"

},

{

"Effect": "Allow",

"Action": [

"sns:Publish"

],

"Resource": "*"

},

{

"Effect": "Allow",

"Action": [

"logs:CreateLogGroup"

],

"Resource": [

"arn:aws:logs:*:*:log-group:/aws/rds/*",

"arn:aws:logs:*:*:log-group:/aws/docdb/*",

"arn:aws:logs:*:*:log-group:/aws/neptune/*"

]

},

{

"Effect": "Allow",

"Action": [

"logs:CreateLogStream",

"logs:PutLogEvents",

"logs:DescribeLogStreams"

],

"Resource": [

"arn:aws:logs:*:*:log-group:/aws/rds/*:log-stream:*",

"arn:aws:logs:*:*:log-group:/aws/docdb/*:log-stream:*",

"arn:aws:logs:*:*:log-group:/aws/neptune/*:log-stream:*"

]

},

{

"Effect": "Allow",

"Action": [

"kinesis:CreateStream",

"kinesis:PutRecord",

"kinesis:PutRecords",

"kinesis:DescribeStream",

"kinesis:SplitShard",

"kinesis:MergeShards",

"kinesis:DeleteStream",

"kinesis:UpdateShardCount"

],

"Resource": [

"arn:aws:kinesis:*:*:stream/aws-rds-das-*"

]

},

{

"Effect": "Allow",

"Action": [

"cloudwatch:PutMetricData"

],

"Resource": "*",

"Condition": {

"StringEquals": {

"cloudwatch:namespace": "AWS/RDS"

}

}

}

]

}

Once created, we we’ll need to :

- unlike the managed role from the

s3Importfunction; - link the new role to the

s3Importfunction;

Careful now, swapping and applying the role may takes a few minutes, don’t believe what the AWS console tells you.

That’s it now, we’re all set !

Usage

To load the content of a CSV file from S3, we’ll just need to :

SELECT aws_s3.table_import_from_s3 (

'target-table',

'columns list, separated by a comma, OPTIONAL',

'DELIMITER '','' CSV HEADER',

aws_commons.create_s3_uri(

'bucket-name',

'file-prefix',

'region-code'

)

);

When providing an empty string as column list, the command will try to map the file columns to the table columns.

The pitfalls

Constraints

Here are some constraints to keep in mind, not necessarily documented :

- the bucket name must not contain a period

.(I got this error message :S3 bucket names with a period (.) are not supported). Yeah I know we should havepath-styleS3 bucket, but hey, one has to deal with legacy right ?! ;cannot be used as a delimiter, but just so you know, this is not a PostgreSQL limitation as it accepts any character as a delimiter, as long as it’s single one-byte character;- just like the

COPYcommand from PostgreSQL only text, CSV, or binary format are supported;

Error handling

When an error occurs, the PG client doesn’t get a real, explicit error message. I mean, either for an unsupported bucket

name or a missing access right, we get the very same error message :

Unable to generate pre-signed url, look at engine log for details

We must go dig in the PG logs, from the RDS console to get an explicit error message and debug efficiently.

Conclusion

Once we get through the installation and understand the limits and pitfalls, this feature is super useful and easy to use.

Unfortunately (well, this is not really surprising) we can’t install the two extensions cannot on simple EC2. Life would be so nice if we could use AWS feature as we wish, where we wish !

So we get to choose:

- either we go for RDS, but we stick to the AWS handpicked extensions (exit timescale, citus or their columnar storage, … ),

- or we use other solutions, and we deprive ourselves of all the benefits of hosted / managed services.